The Future of Spatial Computing

How will spatial computing transform the way we live, learn, and connect? Will this be a platform shift that ushers in a new era of innovation?

Spatial computing is technology that blends our physical and digital worlds in a natural way.

Spatial computing is a new catch-all term for augmented reality, virtual reality and the metaverse. It describes immersive experiences that let you perceive space and the relative positions of objects, and layer digital objects into your surroundings in ways that replicate our experience of physical reality.

The devices we use today (computers, phones and TV screens) limit our inputs to what we can type or touch. Spatial computing expands computing into responsive, layered experiences that incorporate vision, hearing and touch.

The spatial computing revolution is gaining momentum. The global spatial computing market is predicted to grow at a compound annual growth rate (CAGR) of 22.3% from 2024 to 2033, reaching $815.2 billion USD.[1]
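To make the growth figure more concrete, here is a back-of-envelope sketch of the CAGR formula, end = start × (1 + r)^n, run in reverse. It assumes 2024 is the base year and 2033 the final year (nine compounding periods); the implied 2024 market size is our own arithmetic, not a figure taken from the cited forecast.

```python
def project(start_value: float, rate: float, years: int) -> float:
    """Compound a starting value forward at a fixed annual growth rate."""
    return start_value * (1 + rate) ** years


def implied_start(end_value: float, rate: float, years: int) -> float:
    """Invert the CAGR formula to recover the starting value."""
    return end_value / (1 + rate) ** years


# Assumption: 2024 is the base year and 2033 the end year (9 compounding periods).
YEARS = 2033 - 2024
RATE = 0.223          # 22.3% CAGR, from the forecast quoted above
END_VALUE_BN = 815.2  # USD billions in 2033, from the same forecast

base_bn = implied_start(END_VALUE_BN, RATE, YEARS)
print(f"Implied 2024 market size: about ${base_bn:.0f} billion")
```

Under these assumptions the implied 2024 base is roughly $133 billion; if the forecast counts its periods differently, that figure shifts accordingly.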

Meta, Alphabet, Microsoft and Amazon are all piling in to reap the benefits, and consumer demand is starting to grow. Around 200,000 Apple Vision Pros were reportedly pre-ordered in the first ten days after pre-orders opened on January 19, 2024.[2] This surge of interest in immersive experiences follows leaps in the hardware itself (computational power and sensor technology), years of investment from big tech players, and developments in adjacent areas like AI, the IoT and 5G connectivity.

Spatial Computing 101

There are a few technologies that have enabled leaps in visualisation capabilities.

Computer Vision: this takes data from cameras and sensors and uses it to capture visual information about the environment - like position, movement and orientation of objects.

Sensor Fusion: this combines data from multiple sensors, like cameras and LiDAR, to create a more accurate view of the environment than any single sensor could provide.

Spatial Mapping: this is used to create 3D models of an environment, which makes it possible to place digital content precisely.

Seamless Control: rather than rely on a mouse click, we can now control outputs via simple hand and eye gestures.
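As a rough illustration of sensor fusion, the sketch below combines two independent distance estimates (say, a camera depth estimate and a LiDAR reading) by inverse-variance weighting, the same idea at the heart of a Kalman filter update. The `Measurement` type and the noise values are hypothetical; real headsets fuse many more signals, continuously over time.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    value: float     # estimated distance to a surface, in metres
    variance: float  # uncertainty of that estimate, in metres squared


def fuse(a: Measurement, b: Measurement) -> Measurement:
    """Combine two independent estimates by inverse-variance weighting.

    The less noisy sensor gets more weight, and the fused variance is
    smaller than either input variance.
    """
    weight_a = 1.0 / a.variance
    weight_b = 1.0 / b.variance
    value = (weight_a * a.value + weight_b * b.value) / (weight_a + weight_b)
    variance = 1.0 / (weight_a + weight_b)
    return Measurement(value, variance)


# Hypothetical readings: a noisy camera depth estimate and a precise LiDAR return.
camera = Measurement(value=2.10, variance=0.04)
lidar = Measurement(value=2.02, variance=0.0004)
fused = fuse(camera, lidar)
print(f"fused distance: {fused.value:.3f} m (variance {fused.variance:.6f})")
```

The fused estimate lands close to the LiDAR reading because that sensor is assumed to be far less noisy. For two scalar measurements of a static quantity, this weighting is exactly what a Kalman filter's update step reduces to.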

Together, these technologies make it possible to experience depth. We can now have realistic, natural and immersive experiences with virtual objects: we can create, move, manipulate and hide them much as we would in the real world. The applications are varied and impressive, from checking whether a new sofa fits in your living room, to learning how to perform heart surgery, to a live product demonstration of the mechanics of a jumbo jet.
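Placing a virtual sofa on your floor typically comes down to a hit test: cast a ray from the viewer toward the point they are looking at or pointing to, and find where it meets a detected surface. AR frameworks provide their own raycasting for this; the standalone function below is a hypothetical sketch that assumes the floor has already been detected as a flat plane, using a y-up coordinate convention.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def hit_test_plane(
    ray_origin: Vec3,
    ray_dir: Vec3,
    plane_point: Vec3,
    plane_normal: Vec3,
    eps: float = 1e-9,
) -> Optional[Vec3]:
    """Return where a ray meets a plane, or None if it never does."""
    denom = dot(ray_dir, plane_normal)
    if abs(denom) < eps:
        return None  # ray runs parallel to the plane
    offset = (
        plane_point[0] - ray_origin[0],
        plane_point[1] - ray_origin[1],
        plane_point[2] - ray_origin[2],
    )
    t = dot(offset, plane_normal) / denom
    if t < 0:
        return None  # intersection lies behind the viewer
    return (
        ray_origin[0] + t * ray_dir[0],
        ray_origin[1] + t * ray_dir[1],
        ray_origin[2] + t * ray_dir[2],
    )


# Hypothetical scene: eyes 1.6 m above a floor plane (y = 0), looking down and ahead.
anchor = hit_test_plane(
    ray_origin=(0.0, 1.6, 0.0),
    ray_dir=(0.0, -1.0, -1.0),
    plane_point=(0.0, 0.0, 0.0),
    plane_normal=(0.0, 1.0, 0.0),
)
print(anchor)
```

The returned point is where the virtual object would be anchored; checking whether the sofa "fits" would then compare its footprint against the mapped room around that anchor.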

As investors, we are turning our attention to the business models that will emerge. Of course, there’s the hardware itself, and the application layers built on top.

There will be both horizontal players, which offer broad applications that work across sectors (like Meta), and vertical players, which own a sector or a specific use case (like Netflix). When we think about applications in a specific field, will the eventual winners be horizontal platforms that support many use cases, or vertical players that own specific pillars such as medical learning, with products tailored to the institutional nuances of the medical world?

What changes are afoot

We’ve been on a journey to make experiences more immersive, sticky and memorable for a while. From the first motion pictures, to videos on a smartphone, to the new Apple Vision Pro, we seek experiences that are as real and meaningful as our technology allows.

Within the spatial realm, we moved from Pokémon Go, which whipped kids and adults into a frenzy as their favourite Pokémon characters jumped off their phones and ‘appeared’ in the real world; to Oculus Rift, used primarily by gamers who wanted virtual environments they could interact with; to Apple Vision Pro, which is showcasing the power of immersion beyond gaming.

Without a real reason to exist, technology can get gimmicky fast. Whatever the application, developers need a clear articulation of what their product does and why it makes people’s lives better, whether at work or at play. Companies that rush to create an immersive version of their existing product will likely be disappointed. After all, no one is craving a more immersive way to receive an email! While this may be Product Management 101, it’s easy to get swept up in hype cycles and lose sight of building something that matters.

Hardware becoming more affordable

Apple Vision Pro currently retails at around $3,500 USD. The ‘Pro’ in the name suggests that a cheaper, ‘everyday’ version may follow. Technology prices typically drop noticeably within a couple of years of launch, and when that happens, we expect to see an uptick in usage. It’s also worth remembering that most new technology is met with resistance before we embrace it and integrate it into our lives.

Upgraded Zoom meetings

Spatial computing allows for a more powerful sense of presence, which makes for richer, deeper and more memorable connections. This has applications both for workplaces trying to foster intimacy among remote teams and for our personal relationships, helping to bridge distance and augment meaning. With the cost of living pushing more families to live further apart than ever before, this matters.

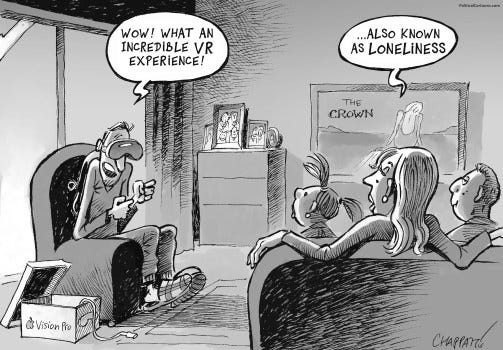

VR has sometimes been criticised as an isolating experience, compounding fears about the damage tech can do to interpersonal relationships. The next generation of innovators must overcome this limitation to create connected experiences.

Whole-body haptic experiences

Haptic gloves, bodysuits, and similar tools simulate the feeling of actually touching a digital object. Although these solutions have seen limited adoption in recent years, that could change as new vendors enter the market with more affordable options.

It feels like mind reading

With today’s computers and devices, we look at something we find interesting, then move our finger or mouse to click on it. With the Apple Vision Pro, gaze detection already lets you target something simply by looking at it, then select it with a light tap of your fingers. This leap has removed a step between our intent and the outcome. If we play this out further, we might see another step removed: our intent could be understood before we are even aware of it, and the system could respond in real time. To understand intent and predict our next moves accurately, we’ll need to see predictive AI integrated with spatial computing. Machines will need to understand context as well as usage patterns in order to predict in a way that feels welcome rather than intrusive. If the tech works, users will enjoy a seamless experience that verges on mind reading. If it doesn’t quite work, it will feel clunky and invasive.
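One common way eye-tracking interfaces turn gaze into an action is a dwell timer: if your gaze rests on the same target for long enough, a selection fires. This is a generic technique offered as a hypothetical sketch, not a description of how the Apple Vision Pro works; the `DwellSelector` class, the target names and the threshold are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DwellSelector:
    """Fires a selection once gaze has rested on one target long enough."""

    dwell_seconds: float = 0.8  # hypothetical threshold; real systems tune this
    _target: Optional[str] = None
    _since: float = 0.0
    _fired: bool = False

    def update(self, target: Optional[str], timestamp: float) -> Optional[str]:
        """Feed one gaze sample; return the target's id when a selection fires."""
        if target != self._target:
            # Gaze moved to a new target (or to nothing): restart the timer.
            self._target = target
            self._since = timestamp
            self._fired = False
            return None
        if (
            target is not None
            and not self._fired
            and timestamp - self._since >= self.dwell_seconds
        ):
            self._fired = True  # fire once per dwell, not on every later sample
            return target
        return None


selector = DwellSelector(dwell_seconds=0.5)
samples = [("button", 0.0), ("button", 0.3), ("button", 0.6), ("button", 0.9), ("menu", 1.0)]
for target, t in samples:
    print(t, selector.update(target, t))
```

Dwell timing is simple but prone to accidental selections (often called the ‘Midas touch’ problem in eye-tracking research), which is one reason many headsets pair gaze with a separate confirming gesture.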

Who Will Win

It’s hard to talk about winners in this space and not mention the big players, who have been spending like they mean it.

Meta (then Facebook) acquired Oculus VR in 2014 for about $2 billion. Oculus went on to release the Oculus Rift and Oculus Quest headsets, and built up a first-party VR content arm, Oculus Studios. The company’s rebrand to ‘Meta’ in 2021 was a powerful statement of its intent to win the spatial race.

Meanwhile, Apple launched ARKit in 2017, which allows developers to build AR experiences for iOS devices that overlay digital content onto the real world. It has since released its Apple Vision Pro headset and is positioning itself as an attractive partner for nascent spatial developers, in the hope of replicating its strategy for phones: to become the ultimate app platform.

Amazon’s spatial experiments are designed to enhance the shopping experience. They developed Sumerian, which let developers create immersive 3D experiences with ease, and Amazon AR View, which enables shoppers to visualise products in their physical space. They are also experimenting with their own wearable device, Echo Frames. For now, these are smart glasses with Alexa built in and lack spatial features, but they could become an entry point into the space.

Finally, Microsoft have developed their own headset, called HoloLens, that allows users to engage with holograms overlaid on the real world. On top of this, they have built apps and platforms of their own. One is the accessibility app Seeing AI, which uses computer vision to describe the world to visually impaired people: it can read text, identify objects, recognise faces and even interpret emotions. Another is Azure and Windows Mixed Reality Services, which help developers build AR experiences and games for Windows products. They also made some notable acquisitions in 2016 and 2017, like AltspaceVR (which became part of the mixed reality division), Simplygon and MinecraftEdu.

Improved UX

Early users seem to agree that right now, the primary use case is the ability to seamlessly toggle between your watch, phone, and laptop in an immersive way, at your desk.

While this might not be the future we had in mind, there’s hope that simple augmentations of day-to-day life will emerge. Everyday interactions could be enhanced by digital information overlaid onto the real world, from real-time navigation that helps you find your way around a new environment, to language translation when you’re travelling, to relevant contextual information that appears as you shop in store. Take immersive fitness: Minimis technology makes glasses with AR functionality that improve safety while enhancing the rider’s experience.

Immersive learning experiences

The implications for learning are immense. Experiencing lessons in immersive, dynamic and layered environments could accelerate knowledge transfer while making the lessons more indelible. However, it’s hard to see who will pay for it. Schools, universities and other learning institutions are often budget-constrained and struggling to keep pace with change, as well as to define their role in the modern education landscape. Cracking the education system means finding a business model that works.

Democratising healthcare

Spatial has the power to transform healthcare, from medical training, to remote consultations, to machinery demonstrations and experiential rehabilitation programs. Each of these has the ability to improve outcomes and broaden reach while stripping out costs.

As a result, spatial could democratise access to care. Imagine living 1,000km from a major hospital and still being able to receive a niche surgery from your local doctor, guided by an expert surgeon who is able to ‘be’ in the operating theatre with you.

Enhancing sales

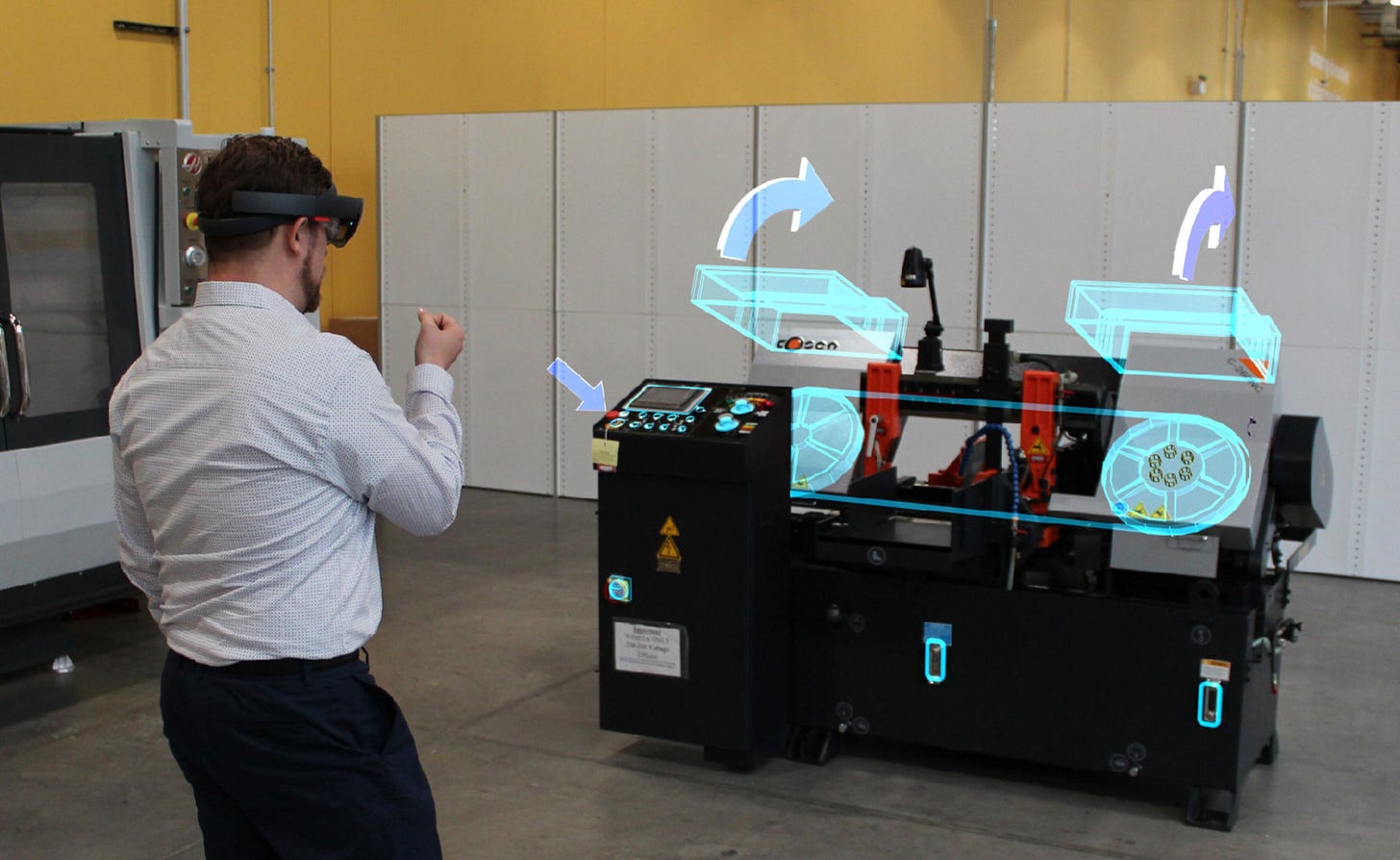

The sales process stands to be accelerated by demonstrations that allow the potential buyer to see a product in situ, inspect its inner workings, see its component parts and learn how it works. This is useful for a range of sales applications, from furniture and cars to holidays, industrial machinery, hospital equipment and even architectural designs. 3D modelling has been an expensive, time-consuming strain on architects for years, but it can now be delivered by software that turns a CAD file into a 3D model, ready for interaction.

Training and collaboration

Imagine being able to collaborate with engineers in real time, with a shared 3D view of what you are building. Compared to reading a brochure or watching a video, the richness that comes from isolating component parts, watching their movements, annotating the specifics and seeing how machinery works is immense. Not only does this speed up product development, it also helps train teams and reduces the downtime needed for repairs.

Captivating marketing

In our article on the future of DTC, we spoke about the changing role of retail and its increasing focus on creating immersive, theatrical experiences that dazzle - and warrant a selfie. Spatial can build on this foundation, allowing marketers to offer intimate experiences to both loyal fans and brand newcomers. Imagine being able to accompany Ferran Adrià on a spice tour in a Moroccan souk, or take a stroll down the streets of Paris alongside Coco Chanel with the 1920s in full swing, or be a fly on the wall at the Ferrari team’s design meeting. Technology has already given rise to superfan communities and spatial will allow this to go deeper.

Enriched entertainment

For many, their first exposure to spatial will be through entertainment, whether gaming, movies or TV shows. It’s not hard to see why an audience experience where the content feels even more real is desirable. Despite the current hardware barrier, this is likely where we will first see mass adoption. It’s also likely that the way we tell stories will change. As spatial makes it possible to meet, interact and immerse yourself in a new world, we are no longer bound by the confines of linear storytelling, or stories with one fixed outcome. In essence, we are moving from spectator to participant, which gives new meaning to the word immersive. On top of this, live events, concerts and sports will take place in virtual arenas, and attending with friends and family from all over the world will become commonplace.

A new era of gaming

Gaming has been an early experimental playground for spatial computing. Games have become more immersive (thanks to AR/VR and haptics), with more interaction, more sociability and more responsiveness to local environments. For example, Supercell created Clash Quest AR, which invited players to stage battles on real-world surfaces for a richer experience. These developments are turning the world into one big game.

AI metaverse

Spatial creates immersive environments where people can interact with each other in a whole new context. With avatars that support real-time communication and collaboration, applications are growing beyond gaming and entertainment to reach the workplace. Virtual HQs are up and running at companies like Meta, Roblox and Epic Games. As our ability to merge the physical and digital continues to develop, and avatar functionality improves, meeting in metaverses could become more common.

Who’s Making Waves

JigSpace

JigSpace is a technology platform for creating, sharing and viewing 3D presentations known as ‘Jigs’. Put simply, a user can upload a CAD file, which is automatically converted into a 3D model they can tinker with in real time. With an immersive, animated and detailed 3D Jig in front of you, the use cases are many: from understanding how equipment and machinery works, to training and education, to real-life sales demonstrations, and even immersive marketing experiences.

Starline

Project Starline is an experimental video communication system in development at Google that lets users see a 3D representation of the person they are communicating with. The goal of Starline is to make people feel like they are there, together, and intended applications range from better workplace communication to closing the distance between families scattered around the world.

Niantic Labs

The makers of Pokémon Go generated US $670 million in 2023. With the help of their community, Niantic Labs want to map the world “to the centimetre” so that developers can anchor digital content in the physical world. Their goal is to foster connectivity and bring communities closer together. The base product would enable the world to become one giant game of Pokémon Go.

Lumus

Lumus develops transparent display technology for AR glasses. Their displays superimpose contextual digital content onto a real-world view. The applications are broad, but include training (medical, pilot and more), fitness, physio and coaching, as well as workplace communications like holographic calls and real-time project collaboration. They believe their technology will make mobile phones redundant.

Scope AR WorkLink

This AR platform for enterprise applications focuses on remote assistance and training. It allows users to create and share AR instructions and guides, enabling remote workers to receive real-time guidance and support through AR glasses or smartphones.

Blippar

This app combines computer vision with augmented reality to recognise and interact with physical objects and images. Users can point their device’s camera at items such as products or advertisements to unlock additional content and experiences.

AmazeVR Concerts

This app is designed to give music fans a front-row seat at concerts, with live-action 3D footage of their favourite artists in beautifully rendered virtual environments. This tool can democratise access and overcome barriers of geography and, in time, affordability.

Healium

This mindfulness app describes itself as a ‘drugless solution for burnout’. It takes a new approach to meditation, with AR and VR experiences powered by your body’s electrical signals. As you reach states of focus or a quiet mind, the bio- and neurofeedback wearables pick up changes in your patterns and power the meditation experience forward. All data is captured on a dashboard so you can train your brain to manage anxiety and focus on an ongoing basis.

10 years from now

In the not too distant future, we can expect to see seamless integration of digital experiences and information into the physical world. The implications are far-reaching, impacting how we work, learn, communicate, consume and unwind.

Retail experiences will continue to evolve, with virtual try-ons and in-home visualisation diminishing the need to visit a store. At work, we can expect enhanced collaboration, with virtual workspaces enabling real-time modelling, presentations and meetings that close geographical distances and increase intimacy. These signals point to a more empowered remote workforce, with people able to achieve a better work-life balance by choosing where they want to live.

How we get around will change too. With AR overlays and VR simulations, town planners and architects will be able to pressure test the impact of their visions before implementation. At the same time, access to real time information should ease traffic and congestion. Further, real-time data about air quality, pollution levels, and weather conditions will help us make informed decisions in the moment.

On the medical front, we have high hopes for the role of spatial in democratising healthcare access and affordability. With advanced medical training, surgical simulation and telemedicine consultations, the implications are immense. We see medical students receiving better, more hands-on training before they graduate, and practitioners delivering better care more efficiently and remotely. Beyond the medical classroom, applications to learning are exciting and far-reaching. Imagine being able to explore the history of your neighbourhood with digital landmarks, get a virtual tour of the solar system, conduct virtual science experiments or meet and chat with Charlotte Brontë. Powered by AI, these experiences will be tailored to individual learning needs, further increasing their effectiveness. We will also see students from all corners of the globe brought together in virtual classrooms, allowing everyone to benefit from the power of diversity.

50 years from now

Talking about the future is always challenging, especially when the subject matter is evolving so rapidly. Broadly, we see three sweeping areas of change that spatial unlocks in the far future.

From phones to contact lenses: We’ll ditch these intrusive devices and instead rely on contact lenses (having moved on from glasses) that augment reality and deliver immersive experiences. They’ll be able to seamlessly annotate, explain, interact and transact in the real world in a manner far less disruptive to flow than pulling out a phone.

Brain-Computer Interfaces (BCIs) Meet Spatial: In the future, we see spatial working with BCIs to deliver immersive experiences that respond to your bio and neuro information in real time, with the goal of helping you make better choices. Imagine being diverted to walk through a park to help regulate your nervous system, receiving suggestions on what to order off a menu based on your metabolic information, or getting live performance coaching mid-tennis match.

Avatars Abound: Flash forward 50 years and we’ll be interacting with avatars in a way that feels indistinguishable from our human relationships. We’ll have AI friends, take tours of the Colosseum with AI tour guides, form significant romantic relationships with AIs and learn from AI celebrity guest lecturers teaching university curricula. We’re already seeing the foundations of this bubble up. Artist FKA Twigs recently created a digital twin to talk to the press and engage with fans so she can spend more time making music. Meanwhile, LinkedIn co-founder Reid Hoffman trained an AI model on the articles, blogs, podcasts and books he’d created to power his own avatar. Then, in a Black Mirror twist, ‘real’ Reid interviewed ‘avatar’ Reid with the goal of uncovering insights into his own perspectives, arguments and persuasion techniques by being on the receiving end.